$$P(X_n=j \mid X_{n-1}=i_{n-1},\cdots,X_0=i_0)=P(X_n=j \mid X_{n-1}=i_{n-1}).$$

We call such a sequence a Markov chain. If the chain is homogenous (that is, the probability of changing from one state to another is independent of $n$), probability theory makes it clear that

$$P(X_1=j)=\sum_{i=1}^nP(X_1=j \mid X_0=i)P(X_0=i).$$

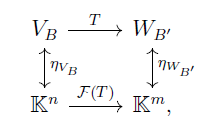

If we let the matrix $P$ be defined as the matrix with entries $p_{j i}:=P(X_1=j \mid X_0=i)$ and $v_i^k=P(X_k=i)$, then the above equation translates to

$$P (v^0)=v^1.$$

The condition of being a Markov chain then implies that

$$P^n(v^0)=v^n.$$

Probabilistically speaking, this tells us that if we know the initial probability distribution $v^0$, then we know the probability distribution of the $n$-th random variable, given by $v^n$. Note that there is an obvious condition for $P$ to be related to a probability: we must have that $\sum\limits_{j=1}^n p_{ji}=1$ for every $i \in A$ (that is, the elements of each column must sum to $1$). We note that the awkwardness of the exchanged positions of $i,j$ is why some books prefer to do transposed calculations.

It is of obvious interest to known what happens assymptotically. For instance, if

$$\lim_{n \to \infty} P^n(v^0)$$

exists for some $v^0$, then the distribution of probability after $n$ steps is reaching an equilibrium. Let's given an example. Consider that $X_n$ is $2$ if it rains or $1$ if it does not rain, and we have that $p_{2,2}=\frac{1}{3}$, $p_{1,2}=\frac{2}{3}$, $p_{2,1}=\frac{1}{4}$, $p_{1,1}=\frac{3}{4}$. That is, we have the matrix

$$P=\begin{pmatrix}

3/4 & 2/3 \\

1/4 & 1/3

\end{pmatrix}.$$

It may be a good idea to interpret $p_{i,j}$ (for example: $p_{2,1}$ is the probability that it will rain given that it did not rain the day before). A simple computation yields eigenvalues $\lambda_1=1,\lambda_2=\frac{1}{12}$ to the matrix. Therefore, we have two linearly independent eigenvectors $v_1,v_2$, and the matrix is diagonalizable. Since they are linearly independent, any vector $v \in \mathbb{R}^2$ is $v=c_1 v_1+c_2 v_2$. Note then that

$$A^n(v)=A^n(c_1v_1+c_2v_2)=c_1v_1+\lambda_2^nc_2v_2 \to c_1 v_1$$

as $n \to \infty$. Therefore, every vector which is not a constant multiple of $v_2$ converges to a multiple $v_1$, while the rest converges to $0$. Therefore, the eigenvector associated to $1$ is ``stationary"{}. Probabilistically, if the initial distribution is generic (explicitly, is not a multiple of $v_2$), it will converge to $v_1$.

It is also obvious that eigenvectors play an important role. Note, for instance, that if $P^n v$ converges, then it converges to an eigenvector. In fact, if $v'=\lim P^n v$,

$$P P^n v=P^{n+1} v \stackrel{n \to \infty}{\implies} P v'=v'.$$

With this initial discussion motivating our problem, we come to the definitions.

Definition: A probability matrix is a square matrix $P=(p_{ij})$ such that $p_{ij}\geq 0$ for every $i,j$ and $\sum\limits_{i=1}^n p_{ij}=1$. A strict probability matrix is a probability matrix such that $p_{ij}> 0$ for every $i,j$.

Note that we have exchanged back the comfort of indices, since we left the probability realm and there will be no confusion with interpretation of the multiplication and the notation, since there will simply be no interpretation.

Definition: A probability vector is a vector $x \in \mathbb{R}^n$ such that $x_i \geq 0$ for every $i$ and $\sum\limits_{i=1}^n x_i=1$. A strict probability vector is a probability vector such that $v_i>0$ for every $i$.

Our intention is to show this small, but already powerful, part of the Perron-Frobenius theorem:

Theorem:[(Part of) Perron-Frobenius] Given a strict probability matrix $P$, then there exists a unique probability eigenvector, and it is a strict probability eigenvector.

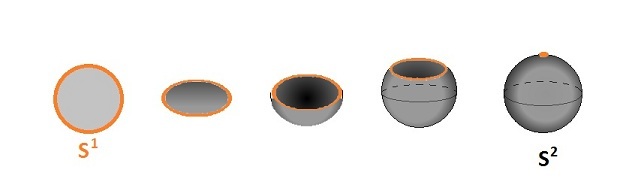

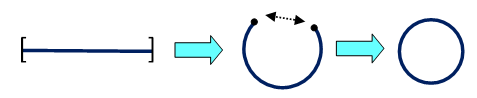

Recall the $\ell^1$ norm on $\mathbb{R}^n$: $\Vert x \Vert_1:=\sum_{i} |x_i|.$ Note that $x$ is a probability vector if and only if $\Vert x \Vert_1=1$ and $x_i \geq 0$ for every $i$. We call the space of probability vectors on $\mathbb{R}^n$ by $\mathcal{P}_n$, and the space of strict probability vectors on $\mathbb{R}^n$ by $\mathcal{P}_n^+$. Note that $\mathcal{P}_n$ is a simplex, and $\mathcal{P}_n^+$ is the intersection of a simplex with an open octant (the interior of the simplex, if viewed as a manifold with boundary).

Simple computations yield that if $v \in \mathcal{P}_n$ and $P$ is a probability matrix, then $Pv \in \mathcal{P}_n$, and also that a probability eigenvector must necessarily be of eigenvalue $1$.

Proof: We first show uniqueness of the probability eigenvector if it exists. For that, suppose that there were two probability eigenvectors. Then, they would form a two-dimensional subspace of eigenvectors. In particular, there would be an eigenvector living on the boundary of $\mathcal{P}_n$. But if $P$ is a strict probability matrix, then a probability eigenvector must necessarily be a strict probability eigenvector, since the sums which form the components of the eigenvector will always have a non-zero summand. This is a contradiction.

Note that the above argument is quite geometrical, whereas the next one is clearly topological.

To show existence, consider the function $f: \mathcal{P}_n \to \mathcal{P}_n$ given by $x \mapsto Px$. Since $\mathcal{P}_n$ is a simplex, Brouwer's fixed point theorem applies to yield that there exists an eigenvector. As we have seen above, it must be a strict probability vector, and this ends the proof.

RSS Feed

RSS Feed